This is an essay about artificial intelligence, but I am going to begin with orcas.

An orca is an apex predator. It takes from the sea what it needs to live — salmon, tuna, seals, and so on. It is a beautiful animal, as well as intelligent and fearsomely efficient. But as intelligent as it may be, it does not invent a trawler that rakes the ocean floor, destroying the habitat of countless creatures that it doesn’t even eat.

The kind of power that humans exercise, through our use of tools, is different in both scale and quality from the hunting behavior of an orca, a tiger, or any other apex predator.

The orca has neither the means nor the inclination to destroy the complex network of life upon which it depends. Homo sapiens stands alone in this regard. No animal besides us has ever had the ability to decimate the biosphere itself.

Maybe this is what the biblical phrase *dominion over the earth* means. It is not, in my view, such a good thing.

For most of human history, we did not have this ability either. But we have it now. Our ability to do harm, to other humans and to all creatures, has been accelerating since the beginning of the Industrial Revolution. That acceleration quickened dramatically with the invention of the atomic bomb, nearly 80 years ago.

With the development of artificial intelligence, the acceleration of technology — and particularly of technology that has the capacity to make our civilization self-terminating — has reached the stage where, on a graph, the curve goes nearly vertical. In our cleverness, we have created tools of such power that they can fundamentally alter the planet itself.

These tools have the bizarre capability to improve themselves, without our input. While the power of these tools is increasing at a rate we can barely comprehend, what has not kept pace is our capacity to exercise wisdom, restraint, and discernment in the use of such tools, as we make decisions regarding how we will live in relation to each other and to the rest of the living world.

A couple of nights ago, I was listening to an interview with the social philosopher and systems thinker Daniel Schmachtenberger. It was a long and wide-ranging interview, and at one point he was talking about ways in which past powerful civilizations crashed and burned.

Throughout history, civilizations that have attained great power have also been self-terminating. Even when they were conquered by an outside force, their downfall was partially or mostly because they were ripe for collapse.

The reasons are various, but also similar from case to case: They ruined their land, depleted their resources, overextended their reach, exploited their workers, corrupted their own institutions, or exhausted the morale and good will of their own citizens.

He asked a question that slipped under my skin like a splinter: “Why have we humans not been good stewards of power?”

I can hardly think of a more important question.

It’s interesting that Schmachtenberger used the word *stewards* in framing his question. Implicit in the word *steward* is the assumption that we are responsible to someone other than ourselves. We are, in other words, caretakers. We bear responsibility for the well-being of others.

To whom might we be responsible? Our children and grandchildren? Our communities? Does it go beyond that, to all humans? Or to other living creatures? Might we bear a responsibility to a deity? Or to a river that provides us with water, or to the soil from which our food grows?

Of course, if we are responsible to no one, then the future beyond our own short lives need not concern us. But most people I know are not content with that. Even if we draw our circle pretty tightly, unless we are soul-dead, most of us care about someone besides ourselves.

We may all have different notions of how far our responsibility extends, but I suspect most of us feel a responsibility toward our most direct descendants, at least. Our children, our grandchildren.

In this context, the word *responsibility* may seem a bit stiff; what we more often call it is *love*.

How do we become better stewards of power? Or, to frame the question a bit differently: How do we find and channel the power to become better caretakers of that which we love?

I believe that we will not be able to navigate the coming years without a substantial shift in how we exercise power — both in regard to how we treat other humans, and in how we treat the other living beings that share this planet with us.

This has everything to do with artificial intelligence.

Let me offer a brief introduction to Daniel Schmachtenberger, although it will be inadequate. I’ll start by stating that for anyone interested in how we might navigate our way through a perilous future, he is worth listening to.

In speaking to friends, I’ve described him as a social philosopher and a systems thinker. I’m not at all sure that this is how he would describe himself. In addition to those descriptors, I’d also add risk analyst. Maybe the best thing to call him is “thoughtful dude with a good heart.”

By risk analyst, I don’t mean what an insurance adjuster or stockbroker does. Think bigger than that. Think about species survival. Think about ecological and civilizational collapse. Think existential risk.

Simply put, an existential risk is something that could spell disaster for everyone, everywhere.

(For example: Climate change and all of its subsidiary effects, runaway artificial intelligence, the unravelling of both ecosystems and social systems, loss of biodiversity, nuclear war, and last but not least, an economy based on limitless growth taking us past planetary limits.)

In examining these issues, Schmachtenberger often employs concepts of game theory, which is a branch of mathematics that can apply to poker, fantasy football, or even flirting with someone in a bar, but which in a wider sense is about how people make choices, and how they weigh the benefits and costs of those choices.

He thinks a lot about three interlocking questions: What kind of predicament are we in? How did we get here? Where are we headed? One of my favorite quotes of his is “If we do not immediately and completely change our direction, we are likely to end up where we are going.”

Let that one sink in.

A concept from game theory that Schmachtenberger often works with is the idea of multi-polar traps. A multi-polar trap is any kind of situation in which people acting in their own self-interest make choices that harm the wider community — including, in the long run, themselves.

Multi-polar traps are characterized by a lack of trust, transparency, and coordination. A classic example from ecology is the tragedy of the commons, in which people deplete a resource for short-term gain, rather than cooperating to find a level of sustainable use that ensures the resource will be available to them (and their children) in the future.

As in “Damn! No more fish in the lake! How come we didn’t see that coming?”
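For readers who like to see the machinery, here is a toy model of that lake in Python. It is a sketch under my own assumptions, not anything Schmachtenberger proposes: the lake holds at most 1,000 fish, the population regrows along a simple logistic curve (fastest when the lake is half full), and five fishers each take the same fixed catch every year. The function `simulate_lake` and all of its numbers are hypothetical.

```python
def simulate_lake(catch_per_fisher, fishers=5, stock=1000.0,
                  capacity=1000.0, growth_rate=0.3, years=50):
    """Toy tragedy-of-the-commons model (all parameters are illustrative assumptions).

    Returns the number of fish left in the lake at the end of each year.
    """
    history = []
    for _ in range(years):
        # Logistic regrowth: births are highest when the lake is half full
        # and fall to zero when it is empty or at capacity.
        stock += growth_rate * stock * (1 - stock / capacity)
        # Everyone takes the same catch; the lake cannot give more fish than it has.
        stock = max(stock - fishers * catch_per_fisher, 0.0)
        history.append(stock)
    return history


restrained = simulate_lake(catch_per_fisher=10)  # 50 fish a year in total
greedy = simulate_lake(catch_per_fisher=20)      # 100 fish a year in total

print(f"Restrained fishers, year 50: {restrained[-1]:.0f} fish left")
print(f"Greedy fishers, year 50: {greedy[-1]:.0f} fish left")
```

With these made-up numbers the lake can regrow at most 75 fish a year (0.3 × 1,000 ÷ 4, the peak of the logistic curve), so a combined catch of 50 settles at a stable population of roughly 789 fish, while a combined catch of 100 empties the lake before year 50. Each greedy fisher does land twice as many fish for a while, which is exactly what makes the choice tempting.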

A classic example from social science is the prisoner’s dilemma, in which two people driven by self-interest and distrust of each other fail to behave in a way that gives the best outcome to them both.
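The prisoner’s dilemma can be sketched the same way. The point values below are a common textbook convention and an assumption on my part; only their order matters (the temptation to defect beats mutual cooperation, which beats mutual defection, which beats being the lone cooperator). `PAYOFFS` and `best_response` are illustrative names, not from any library.

```python
# Points each player receives: (my_points, their_points).
# Conventional textbook values, assumed for illustration; only the ordering
# matters: temptation 5 > reward 3 > punishment 1 > sucker's payoff 0.
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}
CHOICES = ("cooperate", "defect")


def best_response(their_choice):
    """Return the choice that maximizes my own points, given the other player's choice."""
    return max(CHOICES, key=lambda mine: PAYOFFS[(mine, their_choice)][0])


# Whatever the other player does, defecting pays me more...
for theirs in CHOICES:
    print(f"If they {theirs}, my best move is to {best_response(theirs)}.")

# ...so two self-interested players both defect and each gets 1 point,
# even though mutual cooperation would have given each of them 3.
outcome = (best_response("defect"), best_response("defect"))
print(outcome, PAYOFFS[outcome])
```

Whatever the other player does, defecting earns more, so two self-interested players both defect and each walk away with 1 point instead of the 3 that trust would have given them.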

Also, arms races in general are variations of a multi-polar trap. This includes the theory of deterrence through escalating retaliation. It may work for a while, but when it fails, it can fail catastrophically.

In a multi-polar trap, what benefits the individual (or small group) in the short term actually harms everyone in the long term. Thus it is that we are seduced into going down a path that leads us all to a destination no one really wants to arrive at.

(Who among us actually wants ocean acidification? Mass extinctions? Depleted topsoil? Stronger hurricanes? Who wants the ocean to hold more plastic than fish? And yet we relentlessly persist in our march toward these outcomes.)

One way to think about it: We are trapped in patterns of behavior that are self-destructive. Why? Part of the answer is that we operate within systems and institutions that are, at best, poorly designed, and at worst, downright sociopathic.

If we look for technological solutions to our problems without changing the underlying behavioral patterns that brought us to where we are, we are likely to find those very technological solutions contributing to the problems. This is because the self-destructiveness lies less in the technology itself than in the incentives that determine its use.

In addition to multi-polar trap, Daniel Schmachtenberger uses another ten-dollar term: the meta-crisis. Consider this a fancy phrase for the crisis behind the crisis.

Think not of a single problem (like climate change, or nuclear war, or topsoil depletion) but rather of the recurrent patterns behind the self-destructive behaviors that lead to such problems.

There are several existential threats that face humans (not just Americans) in 2024. These threats are intertwined, and they amplify each other. They can’t be solved separately; there needs to be a coordinated, whole-systems approach.

We won’t make much (if any) progress in addressing these threats as long as we operate within systems — economic systems, political systems, religious systems — that offer perverse incentives and reward destructive and exploitive behavior.

Daniel Schmachtenberger, among others, applies the principles of game theory to the open question of whether or not humans can survive our own cleverness at using tools, and our own insatiable desire to dominate one another and gobble up the rest of the living world.

When I was a teenager, the existential threat we (sometimes) worried about was nuclear war. These days, nuclear war is just one of several existential threats, and not even the one that seems most likely. Climate change and all of its subsidiary effects come immediately to mind, of course.

And then there are ecological dominoes falling that are spoken of less often — things like topsoil depletion, dead zones in the ocean, persistent toxins in the water we drink and the air we breathe. Perhaps most disastrous: Loss of biodiversity, from insects on up. (It turns out that insects are a lot more necessary for the continuation of life as we know it than we are.)

At the intersection of environment and technology, disturbing new possibilities lurk: Engineered (or resurrected) viruses can be used as bio-weapons. The same techniques of genetic modification that can be used to enhance the nutritional value of a grain of rice can be used to make seeds sterile, so that they must be purchased, yearly, from corporations.

Are we not the masters of the world? Can we survive the bitter fruit of our own brilliance? How shall we live with ourselves?

A paradigm shift has to occur if we are to make it beyond the next couple of decades.

That paradigm shift has to involve how we understand and apply power — not only among our fellow humans, but in relationship to all other creatures. What sort of power destroys the possibility of a livable future, and what sort of power can bring us into a livable future?

In the realm of technology, who wants immensely powerful and insidious artificial intelligence, developed by people who do not have our best interests at heart, to shape our reality? Who wants a media landscape in which no one can distinguish accurate reporting from deep fakes?

Who wants a society in which people are hopelessly confused, outraged, manipulated, and fearful?

Nevertheless, we are headed, lemming-like, into that very future. “If we do not change our direction, we are likely to end up where we are going.”

We need better route-finders. Where will we find them?

I think it is best that we not give the task to machines, or to sociopathic billionaires, or authoritarian strongmen, or ambitious politicians, or the wizards in Silicon Valley who blessed us all with algorithms that “maximize engagement” on social media, regardless of the effect these algorithms have on the health of our society.

It is a route-finding task for all of us, guided by our meager organic intelligence.

And motivated by our sense of love, tenderness, and responsibility to those who live downstream from us and will inherit the mess we’ve made of the world.

In the popular imagination, the AI threat seems to be the specter of a super-computer run amok, a kind of overlord who either enslaves or eliminates us. I don’t know how likely this is. Some thinkers in the field of AI are concerned about it. It’s not really my primary worry.

The moment at which we are eclipsed by our machines is often referred to by the somewhat mystical term The Singularity. The word seems to be uttered with either a sense of doom or a sense of deliverance, depending on one’s view of AI. (As a recovering evangelical, I can’t help but feel a familiar vibe, as if The Singularity might be a technological equivalent of The Rapture.)

A slightly different concern is that AI will be so freakishly efficient at the tasks we give it that we will be sorry to get what we ask for. Imagine Mickey Mouse in *The Sorcerer’s Apprentice*, unable to stop the brooms he has set in motion.

(For an amusing thought experiment, google “AI and the paper clip problem”.)

As dramatic as these scenarios are, they do not express my main concern about AI. I think that long before it turns us into slaves, or simply wipes us out, or turns the world into paper clips, it is likely to be harnessed by a small group of humans driven not by the desire to shape a better and more sustainable future, but by the desire to gather wealth, power, and control for themselves, at the expense of the rest of us.

The same old goal, but on steroids.

It’s far more banal than Total Domination. It is likely to be, simply, the accumulation of money and influence. But banal or not, with AI at their disposal, they will be ruthlessly efficient. One of the first effects of AI may be the devaluation and impoverishment of workers whose skills will no longer be considered relevant. It is hard to predict just who these people will be.

An awful lot of rapacious human behavior and irreversible damage, assisted by AI, can occur long before we reach The Singularity. AI can (and will) be directed toward more effectively exploiting and manipulating both humans and non-human nature, turning everything under the sun into… well, not paper clips necessarily, but some sort of a commodity.

If AI is developed and funded by people who seek market (or military, or electoral) advantage over their rivals, it will amplify all of the other already-present existential threats. It is an accelerant, like gasoline on a fire.

And there is my concern, in a nutshell: AI is an accelerant to every other existential risk.

The last thing we need, in 2024, is an accelerant. In addition to turbo-charging exploitation and manipulation, AI can accelerate every variety of arms race, including the development of biological weapons, surveillance tools, drone weapons, and cyber-attacks. The right to privacy is already on thin ice; with AI it will be drowned.

And let’s not forget propaganda: AI will shape what we see on our screens, and we will be deceived by disinformation even more easily than we already are. Convincing ‘deep fakes’ may render the idea of visual and auditory recorded evidence meaningless.

It will not surprise me if this AI capacity is used extensively within a year’s time, affecting both electoral and judicial processes.

If our primary way of apprehending truth is through our screens, we are in deep trouble.

Yes, I’m painting a grim picture. Too grim, perhaps, in the view of AI apologists.

After all, couldn’t AI be used to do marvelous things, like cure cancer, design resilient and adaptive cities, more quickly deliver us from fossil fuels, improve the efficiency and reliability of supply chains, or find an alternative to plastic?

Well, sure it could. I hope it will. All technology is available for multiple uses.

A claim I often hear is that AI, like any tool, is morally neutral. I’m not sure that I accept that claim, but I won’t argue the point here. Instead, I will make two observations: First, AI is likely to be used in any way that it can be used. And second, it is generally quicker and easier to unravel a rug than to weave one.

If AI can be used to design more efficient supply chains, for instance, it can also be used to disrupt them. If it can be used to fight a virus, it can be used to create and spread one as well. To what use shall AI be put? This question raises others: Who has the power to determine its use? Who has the wisdom? Are the power and the wisdom in the same hands?

Consider this: AI promises to deliver nearly unlimited power to the relatively small number of people who are at the spearhead of its development and deployment. Who are these people?

Well, I guess we don’t know, for sure. But they are embedded in one of three places: they work for corporations like Google and Microsoft, or are in the employ of obscenely wealthy individuals, or they are in the security/intelligence apparatus of any nation state that has the budget and the expertise to pursue the research. In all three settings, no doubt, there is a race to develop and deploy AI before adversaries/competitors do so.

Whether the goal is to increase market share or to have the most advanced weaponry, the imperative is to win against the competition. So… don’t expect cautious restraint to govern the development of AI. There is no incentive to show restraint. Those who show restraint lose the race. Your competitors (or enemies, if you want to use the word) will move ahead of you.

It doesn’t matter how many respected scientists and philosophers sign a letter warning of the dangers and urging caution.

Along with restraint, both trust and transparency will also be sacrificed. Between governments, between corporations, there will be secrecy rather than coordination. It should go without saying that the general public will not know what is happening. Most of us won’t ever know the extent to which AI manipulates us.

What sort of hunger drives people to win when the cost of winning is perhaps greater than anyone (everyone) can afford to pay? Liv Boeree, a professional poker player, has valuable insights about game theory, zero-sum games, perverse economic incentives, and how these intersect with the pursuit of power through artificial intelligence.

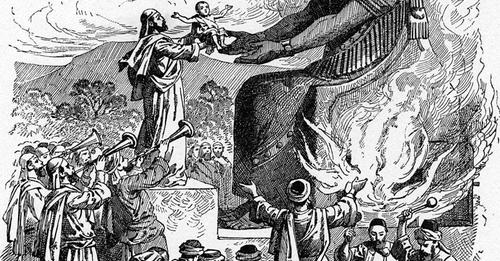

Boeree has memorably personified this hunger as Moloch, which she characterizes as the god of unhealthy competition. Historically, Moloch was the insatiable bull-headed god of the ancient Canaanites, who supposedly granted success and power to his worshippers — in exchange for the sacrifice of their own children.

It’s a pretty damn good metaphor.

All technologies can be (and will be) used for conflict-oriented purposes. AI will, as well. It will be used for purposes of manipulation and disinformation. It will be used to gain political, military, or market advantage.

In other words, it will be used to confer power on some people and to erode the power of other people. And in this regard, it will be more effective, by many orders of magnitude, than any technology in history.

I’m not really sure why I used future tense in the preceding paragraphs.

As we move into exponentially more powerful tech, we can’t continue to use it with the types of conflict-orientation we have used up till now. We can’t continue to use it in a way that is governed by an understanding of our life together as a zero-sum game in which some people win at the expense of others.

Understand: when I say we can’t do this, I mean we can’t do this and expect a livable future for the majority of humanity.

It is perfectly evident that some people think we can do this, and they are already doing it. They intend to be the winners at the game. My contention is that these people are willing to sacrifice a livable future for their own children (and yours) in order to win.

All of the tech we use externalizes harm in some way or another: onto other (usually poorer and more vulnerable) people, onto other creatures, onto the atmosphere, onto the oceans. It takes only a little bit of self-reflection to understand that this harm rebounds, eventually, to ourselves.

Think, for a minute, of the rapid pace of AI development. Now think of the next decade, and the capabilities of this AI deployed for the purposes of the authoritarian leaders who seem to be consolidating their grip all over the globe, like mold overcoming a loaf of bread.

But sure, AI might be used to find a cure for cancer, or find an alternative to plastic.

Whether Google is calling the shots, or Microsoft, or the Pentagon, or bunker-boy billionaires, or the security forces in any number of countries, AI will be put to the service of its developers. (At least initially. At some point, it may slip away from their control and become accessible to any genius in a basement who has the skills to use it.)

But in the meantime, what are the goals of its developers? While the goals of a corporation and the goals of a national government are not quite the same, the word *domination* applies to both. And that right there is the heart of the problem. It is a behavioral problem, rather than a technological one.

My understanding of how large language models work is that they essentially teach themselves how to converse (and behave?) by examining a huge data set of information, primarily (but not necessarily limited to) what is available on the internet. Given how people behave on the internet, this should not give us comfort.

It may be that we can develop AI in a way that is more likely to bring about positive outcomes, but that seems unlikely unless we first change ourselves. After all, we are the data set.

No doubt, I’ve just oversimplified what these models do, but the concept I’m getting at is this: AI is mimetic. It seems to me that the process is computational, not creative. Put another way: It does not seem self-directed. It lacks consciousness, will, self-awareness. It is not reflective. Right?

Right?

The more an AI ‘teaches’ or ‘improves’ itself, the greater its reach. How large can the data set become? Possibly everything ever written or preserved on a computer. An AI could, for example, quickly digest everything written by or about Tolstoy, and then generate new writing that captures the style (and presumably the values) of Tolstoy.

It may not feel what Tolstoy felt, but perhaps it can make decisions as if it felt what Tolstoy felt.

Here are some of my curiosities:

Could AI be ‘trained’ to not only understand humility, but to demonstrate it?

Could it be taught not only the concept of restraint, but the practice of it?

Could it be taught wisdom (which is, of course, not the same as knowledge)?

To many people, these questions will seem odd, maybe even absurd. But I think we had better seriously consider them.

Could AI fully grasp — and not only grasp, but apply to its own behavior — the teaching of Socrates that the acceptance of one’s own limitations is the beginning of wisdom?

As any reader can tell, my disposition toward AI is not favorable. My worries weigh more than my enthusiasm. But the preceding questions are not rhetorical ones. I honestly don’t know the answers.

Could AI be used to nurture and empower people, rather than dominate, manipulate, deceive, surveil, or replace them?

On the surface, this may seem to be a question about AI, but really it is a question about us.

It’s a question about incentives.

Unless we thoughtfully shape the underlying incentives that govern its development and use, AI will be an accelerant to every variety of existential risk.

In the end, the underlying question is more about us than it is about AI: How do we become better caretakers of that which we love?

It’s a human task. It’s not a task we can outsource.